VR Visual & Auditory Load Task

The user's task is to press the trigger button of the VR controller to indicate whether they heard and/or saw a dog or a lion (as a sound or a 3D object), and whether they heard and/or saw a car.

Aims:

To understand users' ability to focus on targets and ignore distractions up to the point where load exceeds perceptual capacity, in visual, auditory and visual-auditory scenarios.

Groups of participants:

-Controls

-People with ADHD/ADHD traits

Task Details:

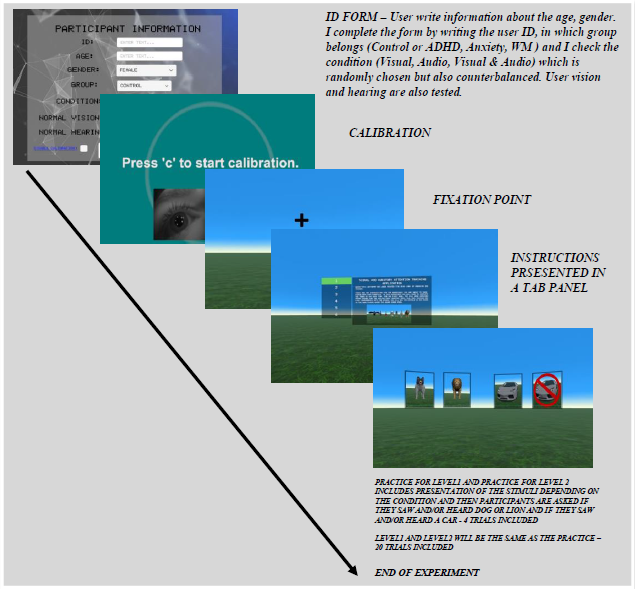

Participants were asked to complete one task with two levels. The first level had only one target; the second level had one target and five non-targets.

The targets were a dog or a lion, each presented on 50% of trials in random order. The non-targets were a crow, a chicken, a cow, a duck and a rooster.

In each level, a critical stimulus (a car) was presented on 50% of trials (25% together with the dog and 25% together with the lion), in random order.
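The trial structure above is a 2 x 2 design (dog/lion x car/no car). A minimal Python sketch of how one level's trial list could be generated follows. It assumes 20 trials per level and exact balancing of the four cells (rather than independent random draws); the names `Trial` and `make_level` are hypothetical and not taken from the project's Unity code.

```python
import random
from dataclasses import dataclass

TARGETS = ("dog", "lion")
NON_TARGETS = ("crow", "chicken", "cow", "duck", "rooster")


@dataclass(frozen=True)
class Trial:
    level: int          # 1 = target only, 2 = target + five non-targets
    target: str         # "dog" or "lion"
    car_present: bool   # whether the critical stimulus (car) is shown
    non_targets: tuple  # distractors shown alongside the target


def make_level(level: int, n_trials: int = 20, seed=None) -> list[Trial]:
    """Build a shuffled block in which each target x car cell gets n_trials / 4 trials
    (assumed exact balancing, giving 50% dog, 50% lion, 50% car overall)."""
    if n_trials % 4:
        raise ValueError("n_trials must be divisible by 4 to balance the 2x2 design")
    per_cell = n_trials // 4
    distractors = NON_TARGETS if level == 2 else ()
    trials = [
        Trial(level, target, car, distractors)
        for target in TARGETS
        for car in (True, False)
        for _ in range(per_cell)
    ]
    random.Random(seed).shuffle(trials)  # random presentation order
    return trials
```

For example, `make_level(2, seed=1)` returns 20 trials, 10 of which contain the car (5 with the dog, 5 with the lion), each shown with all five non-targets. Balancing the cells exactly keeps the 25%/25% split fixed for every participant instead of letting it drift by chance.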

The VR application was developed to present both visual and auditory stimuli. The task had three conditions: (1) visual stimuli only; (2) auditory stimuli only; (3) visual and auditory stimuli.

After each stimulus presentation, four cards (dog, lion, car and no car) were presented in front of the user, who selected cards by pressing the trigger button on the VR headset's controller. All participants completed the task in all three conditions. The task consisted of 20 trials at each of the four task levels.
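Each response pairs an animal card with a car card, so a trial can be scored on two separate questions. The sketch below is a hypothetical scoring scheme, assuming the user picks exactly one animal card and one car card per trial; `Response` and `score_trial` are illustrative names, not the project's actual code. The car judgement is classified in signal-detection terms, which is what a sensitivity measure needs later.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Response:
    animal_card: str  # "dog" or "lion"
    car_card: str     # "car" or "no car"


def score_trial(target: str, car_present: bool, response: Response) -> dict:
    """Score one trial: target identification plus a signal-detection outcome for the car."""
    said_car = response.car_card == "car"
    if car_present:
        car_outcome = "hit" if said_car else "miss"
    else:
        car_outcome = "false_alarm" if said_car else "correct_rejection"
    return {
        "target_correct": response.animal_card == target,
        "car_outcome": car_outcome,
    }
```

For instance, `score_trial("lion", False, Response("lion", "car"))` gives `{"target_correct": True, "car_outcome": "false_alarm"}`: the target was identified correctly, but a car was reported when none was shown.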

Before each level, participants completed practice trials consisting of four blocks. Visual stimuli were spatially separated and positioned randomly within a 90-degree angle of view.
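One way to place stimuli randomly yet keep them spatially separated within the 90-degree field is sketched below. The 10-degree minimum separation is a hypothetical value (the source does not state one), and azimuth 0 is assumed to mean straight ahead. Instead of rejection sampling, which can loop for a long time when the field is crowded (level 2 shows six objects), the sketch samples within the leftover "slack" and then spreads the sorted points apart, so it always finishes in one pass.

```python
import random


def sample_azimuths(n: int, fov_deg: float = 90.0, min_sep_deg: float = 10.0,
                    seed=None) -> list[float]:
    """Return n random azimuths in degrees within +/- fov_deg / 2,
    every pair at least min_sep_deg apart (min_sep_deg is an assumed value)."""
    slack = fov_deg - (n - 1) * min_sep_deg
    if slack < 0:
        raise ValueError("cannot fit n stimuli at this separation")
    rng = random.Random(seed)
    base = sorted(rng.uniform(0.0, slack) for _ in range(n))
    # Shifting the i-th sorted point by i * min_sep_deg guarantees the minimum gap
    # while keeping every point inside [-fov/2, +fov/2].
    positions = [b + i * min_sep_deg - fov_deg / 2 for i, b in enumerate(base)]
    rng.shuffle(positions)  # decouple stimulus order from left-to-right order
    return positions
```

In Unity, each azimuth would then be converted into a position on a circle around the user; that step depends on the scene setup and is not shown here.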

Sound sources were positioned across the 90-degree space by manipulating interaural amplitude and time differences. Participants were exposed to each stimulus for 250 ms, with 10 ms fade-ins and fade-outs.
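The interaural cues described above can be approximated with two standard textbook models, sketched here under assumptions that the source does not confirm: the Woodworth spherical-head formula for the interaural time difference (ITD), $\mathrm{ITD} = \frac{r}{c}(\theta + \sin\theta)$, and constant-power (sine/cosine) panning for the amplitude difference. The head radius, speed of sound and 48 kHz sample rate are assumed values, and Unity's own audio spatializer may compute these cues differently.

```python
import math

HEAD_RADIUS_M = 0.0875   # assumed average head radius in metres
SPEED_OF_SOUND = 343.0   # m/s in air at about 20 degrees C


def itd_seconds(azimuth_deg: float) -> float:
    """Woodworth spherical-head ITD; positive azimuth = source on the right."""
    theta = math.radians(azimuth_deg)
    return HEAD_RADIUS_M / SPEED_OF_SOUND * (theta + math.sin(theta))


def pan_gains(azimuth_deg: float, fov_deg: float = 90.0) -> tuple[float, float]:
    """Constant-power (left, right) gains across the +/- fov_deg / 2 range."""
    p = (azimuth_deg + fov_deg / 2) / fov_deg   # 0 = far left, 1 = far right
    p = min(max(p, 0.0), 1.0)
    return math.cos(p * math.pi / 2), math.sin(p * math.pi / 2)


def delay_samples(azimuth_deg: float, sample_rate: int = 48_000) -> tuple[int, int]:
    """Whole-sample (left, right) delays: the ear farther from the source lags by |ITD|."""
    lag = round(abs(itd_seconds(azimuth_deg)) * sample_rate)
    return (lag, 0) if azimuth_deg > 0 else (0, lag)
```

At the edge of the field (45 degrees) this gives an ITD of roughly 0.38 ms, or 18 samples at 48 kHz; at 0 degrees both ears receive equal gain and no delay. Constant-power panning keeps perceived loudness roughly stable as a sound moves across the field.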

Stimulus duration was set to 250 ms for auditory and/or visual targets in all conditions, because visual targets required this exposure time. When audiovisual (AV) stimuli were presented, the auditory and visual locations corresponded.
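The 250 ms exposure with 10 ms fade-ins and fade-outs can be expressed as a per-sample gain envelope. This sketch assumes linear ramps and a 48 kHz sample rate (a raised-cosine ramp is a common alternative); `envelope` is a hypothetical helper. The same curve could, as an assumption, also drive a visual object's opacity so that AV stimuli share one timing profile.

```python
def envelope(duration_s: float = 0.250, fade_s: float = 0.010,
             sample_rate: int = 48_000) -> list[float]:
    """Per-sample gain: linear fade-in from 0, flat plateau at 1, linear fade-out to 0."""
    n = round(duration_s * sample_rate)
    n_fade = round(fade_s * sample_rate)
    if 2 * n_fade > n:
        raise ValueError("fades are longer than the stimulus")
    gains = [1.0] * n
    for i in range(n_fade):
        g = i / n_fade          # 0.0 at the very first sample, rising towards 1.0
        gains[i] = g            # fade-in
        gains[n - 1 - i] = g    # mirrored fade-out
    return gains
```

At 48 kHz this yields 12,000 samples, starting and ending at exactly zero gain. Starting from zero avoids the audible click that an abrupt onset produces.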

Data Collected:

-Users' demographic information (saved to an Excel file).

-Reaction time (RT) to complete each level (saved to an Excel file).

-Detection sensitivity (DS) to the critical stimulus in each level (saved to an Excel file).

-Eye-tracking data from the Pupil Labs VR add-on.
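If detection sensitivity is computed as the signal-detection index d' (an assumption; the source does not name the formula), it follows from the car-present trials (hits vs. misses) and the car-absent trials (false alarms vs. correct rejections): $d' = z(H) - z(F)$. The sketch below uses Python's standard `statistics.NormalDist` for the inverse normal CDF. The log-linear correction, which adds 0.5 to each count and 1 to each total, is our choice to keep perfect scores from producing infinite z-values.

```python
from statistics import NormalDist


def d_prime(hits: int, misses: int, false_alarms: int, correct_rejections: int) -> float:
    """d' = z(hit rate) - z(false-alarm rate), with a log-linear correction
    so that rates of exactly 0 or 1 still give finite z-scores."""
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(fa_rate)
```

With 10 car-present and 10 car-absent trials per level, `d_prime(5, 5, 5, 5)` is 0 (chance performance), and higher values indicate better discrimination of the car from its absence.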

Hardware:

-Alienware m15 Gaming Laptop

-HTC Vive Pro

-Fovitec 2x 7'6" Light Stand VR Compatible Kit

-Pupil Labs VR eye-tracking add-on

Software:

-Unity

-Unity Asset Store

-Blender

Programming Languages and Tools:

-C#

-Python using Spyder in Anaconda

-Jupyter Notebook

-MySQL

-PHP API